During my pediatrics residency, I built a pretty sizable content management system to host educational and curricular material for my department, a system that’s remained in operation for quite a while now. Over the past few months, though, two senior pediatricians (and regular content contributors) let me know that they were unable to log into the system from home; from their descriptions, they were presented with the login form, entered their information and submitted it, and were immediately returned to the same form without any errors. On its face, it made no sense to me, and made even less sense given the fact that there are a hundred or more regular users who weren’t having any problems logging in. The fact that two people were having the same problem, though, made it clear that something was breaking, so I started taking everything apart to see where the problem was rooted. (This was of particular interest to me, since I use the same authentication scheme in a few other web apps of mine, some of which contain patients’ protected health information.)

Looking at the mechanism I had built, the system takes four pieces of information — the username, password, client IP address, and date and time of last site access — and combines them into a series of cookies sent back to the user’s browser in the following manner:

- the username is put in its own cookie;

- the username, password, and client IP address are combined and put through a one-way hash function to create an authentication token, a token that’s put into a second cookie;

- finally, the date and time of the last site access is put into a third cookie.
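A minimal Python sketch of that three-cookie layout follows. The description above doesn't name the hash function or the cookies, so SHA-256, the `build_cookies` function, and the cookie names `user`, `token`, and `last_access` are all assumptions made for illustration.

```python
import hashlib
from datetime import datetime, timezone


def build_cookies(username, password, client_ip, now=None):
    """Return the three cookie values described above as a dict.

    Hypothetical helper: the hash (SHA-256) and cookie names are assumed.
    """
    now = now or datetime.now(timezone.utc)

    # Join the fields with a separator so ("ab", "c") and ("a", "bc")
    # cannot produce the same input to the hash.
    material = "\x00".join([username, password, client_ip]).encode("utf-8")
    token = hashlib.sha256(material).hexdigest()

    return {
        "user": username,                 # cookie 1: the username
        "token": token,                   # cookie 2: one-way hash of the three fields
        "last_access": now.isoformat(),   # cookie 3: time of last site access
    }
```

The password itself never appears in any cookie; only the one-way hash that includes it does.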

To me, all four pieces of information represent the minimum set needed for reasonable site security. The username and password are obvious, since without them, anyone could gain access to the site. The client IP address is also important for web-based applications; it’s the insurance that prevents people from being able to use packet sniffers, grab someone else’s cookie as it crosses the network, and then use it to authenticate themselves without even having to know the password (a type of replay attack known as session hijacking). (This isn’t perfect, given the widespread use of networks hidden behind Network Address Translation as well as the feasibility of source IP address spoofing, but it’s a pretty high bar to set.) And finally, incorporating the date and time of a user’s last access allows me to implement a site timeout, preventing someone from scavenging a user’s old cookies and using them to access the site at a later time.
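The timeout part can be sketched as a simple age check on the last-access cookie. The 30-minute window, the ISO 8601 timestamp format, and the `session_is_fresh` name are assumptions, not details given above; the sketch also leaves aside how the timestamp cookie is protected from being edited by the client.

```python
from datetime import datetime, timedelta, timezone

SESSION_TIMEOUT = timedelta(minutes=30)  # assumed value, for illustration only


def session_is_fresh(last_access_iso, now=None, timeout=SESSION_TIMEOUT):
    """Return True if the last-access timestamp is within the timeout window."""
    now = now or datetime.now(timezone.utc)
    try:
        last_access = datetime.fromisoformat(last_access_iso)
    except ValueError:
        return False  # unparseable cookie value: treat as expired
    if last_access.tzinfo is None:
        return False  # refuse naive timestamps rather than guess a timezone
    age = now - last_access
    # Reject timestamps from the future as well as ones that are too old.
    return timedelta(0) <= age <= timeout
```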

Looking at that system, I struggled to find the bit that might be preventing these two users from being able to log in at home. I already had a check to see if the user’s browser allowed cookies, so I knew that couldn’t be the problem. These same two users were able to log into the site using browsers at the hospital, so I knew that there wasn’t some issue with their user database entries. That left me with a bunch of weird ideas (like that their home browsers were performing odd text transformation between when they typed their login information and when the browser submitted it to the server, or that their browsers were somehow modifying the client IP address that was being seen by my application). None of that made any sense to me, until I got a late-night email from one of the two affected users containing an interesting data point. He related that he was continuing to have problems, but was then able to log in successfully by switching from AOL’s built-in web browser to Internet Explorer. (He has a broadband connection, and mentioned that his normal way of surfing the web is to log onto his AOL-over-broadband account and use the built-in AOL browser.) When the other affected user verified the same behavior for me, I was able to figure out what was going on.

It turns out that when someone surfs the web using the browser built into AOL’s desktop software, their requests don’t go directly from their desktop to the web servers. Instead, AOL has a series of proxy machines that sit on their network, and most client requests go through these machines. (This means that the web browser sends its request to a proxy server, which then requests the information from the distant web server, receives it back, and finally passes it on to the client.) The maddening thing is that during a single web surfing session, the traffic from a single client might go through dozens of different proxy servers, and this means that to one web server, that single client might appear to be coming from dozens of different IP addresses. And remembering that the client IP address is a static part of my authentication token, the changing IP address makes every token invalid, so the user is logged out of their session and returned to the login page.
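To make the failure concrete, here is a small Python sketch of the check that goes wrong. SHA-256 and the function names are assumptions, and the addresses are reserved documentation addresses rather than real proxies.

```python
import hashlib
import hmac


def make_token(username, password, client_ip):
    """Hash the three fields into a token (SHA-256 assumed for illustration)."""
    material = "\x00".join([username, password, client_ip]).encode("utf-8")
    return hashlib.sha256(material).hexdigest()


def token_matches(cookie_token, username, password, request_ip):
    """Recompute the token from the IP seen on this request and compare."""
    expected = make_token(username, password, request_ip)
    # Constant-time comparison; encode so non-ASCII cookie junk can't raise.
    return hmac.compare_digest(cookie_token.encode("utf-8"),
                               expected.encode("utf-8"))


# Login request arrives via one proxy...
issued = make_token("drsmith", "hunter2", "192.0.2.10")

# ...and the very next request arrives via a different one.
same_proxy_ok = token_matches(issued, "drsmith", "hunter2", "192.0.2.10")
other_proxy_ok = token_matches(issued, "drsmith", "hunter2", "192.0.2.77")
```

Here `same_proxy_ok` is true and `other_proxy_ok` is false, which is exactly the silent bounce back to the login form: the credentials are fine, but the token no longer matches the address the server sees.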

Thinking about this, it hit me that there are precious few ways that an authentication scheme could play well with AOL’s method of providing web access. For example:

- The scheme could just do away with a reliance on the client’s IP address; this, though, would mean that the site would be entirely susceptible to session hijacking.

- The scheme could use a looser IP address check, checking only to make sure the client was in the same range of IP addresses from request to request; this would likewise open the site up to (a more limited scope of) session hijacking, and would be a completely arbitrary implementation of the idea that proxy requests will always take place within some generic range of IP addresses. (Of note, it appears this is how the popular web forum software phpBB has decided to deal with this same problem, only checking the first 24 bits of the IP address.)

- The scheme could replace its checks of the client IP address with checks of other random HTTP headers (like the User-Agent, the Accept-Charset, etc.); to me, though, any competent hacker wouldn’t just play back the cookie header. He would play back all the headers from the request, and would easily defeat this check without even knowing it.

- Lastly, the scheme could get rid of the client IP address check but demand encryption of all its traffic (using secure HTTP); this would work great and prevent network capture of the cookies, but would require an HTTPS server and would demand that the people running the app spend money annually to get a security certificate, all just to work around AOL’s decision on how the web should work.
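The looser check from the second option above, comparing only the first 24 bits of the address, can be sketched with Python's standard `ipaddress` module. This is an illustration of the idea only, not phpBB's actual code, and `same_prefix` is a hypothetical name.

```python
import ipaddress


def same_prefix(ip_a, ip_b, prefix_len=24):
    """Return True if both addresses share the same leading prefix_len bits."""
    # strict=False lets us build the network from a host address by
    # masking off the host bits (192.0.2.10/24 becomes 192.0.2.0/24).
    network = ipaddress.ip_network(f"{ip_a}/{prefix_len}", strict=False)
    return ipaddress.ip_address(ip_b) in network
```

With a 24-bit prefix, `192.0.2.10` and `192.0.2.200` are treated as the same client, while `198.51.100.10` is not. That also shows the weakness: anyone else in the same /24 passes the check too.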

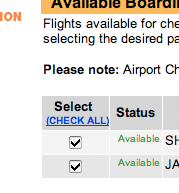

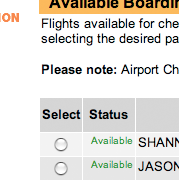

In the end, I added a preference to my scheme that allows any single application to decide on one of two behaviors, either completely rejecting clients that are coming through AOL proxy servers (not shockingly, the way that many others have decided to deal with the problem), or allowing them by lessening the security bar for them and them alone. I check whether a given client is coming from AOL via a two-pronged test: first, I check to see if the User-Agent string contains “AOL”, and if it does, I check to see if the client IP address is within the known blocks of AOL proxy servers. If the client is found to be an AOL proxy server, then (depending on the chosen behavior) I either return the user to the login page with a message that explains why his browser can’t connect to my app, or I build my authentication token without the client IP address and then pass the user into the application.
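The two-pronged test and the two behaviors might look roughly like this in Python. The network blocks below are reserved documentation ranges standing in for the real list of AOL proxy blocks, which isn't reproduced here; the policy names and function names are likewise hypothetical.

```python
import ipaddress

# PLACEHOLDERS ONLY: reserved documentation ranges, not real AOL proxy blocks.
AOL_PROXY_BLOCKS = [
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("203.0.113.0/24"),
]

REJECT = "reject"  # send AOL proxy clients back to the login page
RELAX = "relax"    # let them in, but leave the IP out of the token


def is_aol_proxy(user_agent, client_ip, blocks=AOL_PROXY_BLOCKS):
    """Two-pronged test: User-Agent mentions AOL AND the IP is in a known block."""
    if "AOL" not in user_agent:
        return False
    addr = ipaddress.ip_address(client_ip)
    return any(addr in block for block in blocks)


def token_inputs(username, password, user_agent, client_ip, policy):
    """Return the fields to hash into the token, or None to reject the client."""
    if is_aol_proxy(user_agent, client_ip):
        if policy == REJECT:
            return None
        return [username, password]          # weaker token, AOL clients only
    return [username, password, client_ip]   # normal, IP-bound token
```

Checking the User-Agent first keeps the address lookup off the common path, and requiring both conditions means that a non-AOL browser that happens to sit in one of those blocks still gets the stricter, IP-bound token.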

Finding myself in a situation where users were inexplicably unable to access one of my web apps was reasonably irritating, sure, but the end explanation was way more irritating. Now, I have to maintain a list of known AOL proxy servers in all my apps, and potentially, I have to get involved in teaching users how to bypass the AOL browser for access to any apps that require the stronger level of security. Of course, it’s also helped me understand the places where my authentication scheme can stand to be improved, and that’s not all that bad… but it still makes me want to punish AOL somehow.